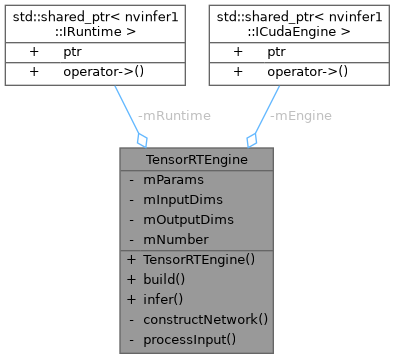

The TensorRTEngine class implements a generic TensorRT model.

`#include <trt_engine_syh.hpp>`

Public Member Functions | |

| TensorRTEngine (const samplesCommon::OnnxSampleParams &params) | |

| bool | build () |

| Builds the network engine. | |

| bool | infer () |

| Runs the TensorRT inference engine for this sample. | |

Private Member Functions | |

| bool | constructNetwork (SampleUniquePtr< nvinfer1::IBuilder > &builder, SampleUniquePtr< nvinfer1::INetworkDefinition > &network, SampleUniquePtr< nvinfer1::IBuilderConfig > &config, SampleUniquePtr< nvonnxparser::IParser > &parser) |

| Parses an ONNX model for MNIST and creates a TensorRT network. | |

| bool | processInput (float *filedata, const samplesCommon::BufferManager &buffers) |

| Reads the input and stores the result in a managed buffer. | |

Private Attributes | |

| samplesCommon::OnnxSampleParams | mParams |

| The parameters for the sample. | |

| nvinfer1::Dims | mInputDims |

| The dimensions of the input to the network. | |

| nvinfer1::Dims | mOutputDims |

| The dimensions of the network's output. | |

| int | mNumber {0} |

| The number to classify. | |

| std::shared_ptr< nvinfer1::IRuntime > | mRuntime |

| The TensorRT runtime used to deserialize the engine. | |

| std::shared_ptr< nvinfer1::ICudaEngine > | mEngine |

| The TensorRT engine used to run the network. | |

The TensorRTEngine class implements a generic TensorRT model.

It creates the network by parsing an ONNX model.

Definition at line 54 of file trt_engine_syh.hpp.

| TensorRTEngine::TensorRTEngine | ( | const samplesCommon::OnnxSampleParams &params | ) | inline |

Definition at line 57 of file trt_engine_syh.hpp.

| bool TensorRTEngine::build | ( | ) |

Builds the network engine.

Creates the network, configures the builder, and creates the network engine.

This function creates the ONNX MNIST network by parsing the ONNX model, then builds the engine (mEngine) that will be used to run MNIST.

Definition at line 101 of file trt_engine_syh.hpp.

References constructNetwork(), mEngine, mInputDims, mOutputDims, and mRuntime.

Referenced by main().

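| bool TensorRTEngine::constructNetwork | ( | SampleUniquePtr< nvinfer1::IBuilder > &builder, SampleUniquePtr< nvinfer1::INetworkDefinition > &network, SampleUniquePtr< nvinfer1::IBuilderConfig > &config, SampleUniquePtr< nvonnxparser::IParser > &parser | ) | private |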

Parses an ONNX model for MNIST and creates a TensorRT network.

Uses an ONNX parser to create the ONNX MNIST network and marks the output layers.

| network | Pointer to the network that will be populated with the ONNX MNIST network |

| builder | Pointer to the engine builder |

Definition at line 179 of file trt_engine_syh.hpp.

References locateFile(), and mParams.

Referenced by build().
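The `SampleUniquePtr` parameters of constructNetwork() are passed by reference, so the caller keeps ownership of each object. This page does not show how `SampleUniquePtr` is defined; the following is a minimal sketch, assuming it resembles a `std::unique_ptr` alias with a custom deleter. `FakeBuilder`, `DestroyDeleter`, and `useBuilder` are hypothetical stand-ins so that the example compiles without TensorRT.

```cpp
#include <cassert>
#include <memory>

// Hypothetical stand-in for a TensorRT interface object; not a real TensorRT type.
struct FakeBuilder
{
    static int liveCount; // number of instances currently alive
    FakeBuilder() { ++liveCount; }
    ~FakeBuilder() { --liveCount; }
};
int FakeBuilder::liveCount = 0;

// Assumed deleter: releases the object when the owning pointer goes out of scope.
struct DestroyDeleter
{
    template <typename T>
    void operator()(T* obj) const
    {
        delete obj;
    }
};

// Assumed shape of the alias; the real definition lives in the sample's headers.
template <typename T>
using SampleUniquePtr = std::unique_ptr<T, DestroyDeleter>;

// Mirrors the by-reference parameter style of constructNetwork():
// the callee uses the object, but ownership stays with the caller.
bool useBuilder(SampleUniquePtr<FakeBuilder>& builder)
{
    return builder != nullptr;
}
```

Under this assumption, an object created in build() and handed to a helper by reference is released exactly once, when build() returns.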

| bool TensorRTEngine::infer | ( | ) |

Runs the TensorRT inference engine for this sample.

This function is the main execution function of the sample. It allocates the buffer, sets inputs and executes the engine.

Definition at line 215 of file trt_engine_syh.hpp.

References mEngine, mParams, and processInput().

Referenced by main().
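Both build() and infer() return `bool` and are called from main(). The body of main() is not shown on this page; a minimal sketch of one plausible call sequence is given here, where `runSample` and `FakeEngine` are hypothetical names introduced for illustration. The template accepts any type exposing `bool build()` and `bool infer()`, so a stand-in can be used where TensorRT is unavailable.

```cpp
#include <cassert>
#include <cstdlib>

// Runs the two public steps in order and maps the result to a process exit code.
// Inference is attempted only if the engine was built successfully.
template <typename Engine>
int runSample(Engine& engine)
{
    if (!engine.build())
    {
        return EXIT_FAILURE; // no engine, so there is nothing to run
    }
    if (!engine.infer())
    {
        return EXIT_FAILURE;
    }
    return EXIT_SUCCESS;
}

// Hypothetical stand-in used only to exercise runSample() without TensorRT.
struct FakeEngine
{
    bool buildOk;
    bool inferOk;
    bool inferCalled;

    FakeEngine(bool b, bool i) : buildOk(b), inferOk(i), inferCalled(false) {}

    bool build() { return buildOk; }
    bool infer()
    {
        inferCalled = true;
        return inferOk;
    }
};
```

Checking the result of build() before calling infer() matters because infer() references mEngine, which is only set by a successful build.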

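| bool TensorRTEngine::processInput | ( | float *filedata, const samplesCommon::BufferManager &buffers | ) | private |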

Reads the input and stores the result in a managed buffer.

Definition at line 263 of file trt_engine_syh.hpp.

References mInputDims, and mParams.

Referenced by infer().
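The body of processInput() is not reproduced here. As a rough illustration of what "reads the input and stores the result in a managed buffer" can involve, the sketch below derives an element count from the input dimensions and copies that many floats into a host-side buffer. `DimsLike`, `volume`, and `copyInput` are hypothetical stand-ins (a plain `std::vector<float>` replaces the BufferManager), and the sketch assumes static, non-negative extents.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for nvinfer1::Dims: a rank plus per-axis extents.
struct DimsLike
{
    int nbDims;
    int d[8];
};

// Number of elements described by the dimensions (product of all extents).
// Assumes every extent is non-negative, i.e. no dynamic (-1) axes.
std::size_t volume(const DimsLike& dims)
{
    std::size_t count = 1;
    for (int i = 0; i < dims.nbDims; ++i)
    {
        count *= static_cast<std::size_t>(dims.d[i]);
    }
    return count;
}

// Copies exactly volume(dims) floats from `filedata` into `hostBuffer`.
// Returns false when the source pointer is null or the buffer is too small.
bool copyInput(const float* filedata, const DimsLike& dims, std::vector<float>& hostBuffer)
{
    const std::size_t count = volume(dims);
    if (filedata == nullptr || hostBuffer.size() < count)
    {
        return false;
    }
    std::copy(filedata, filedata + count, hostBuffer.begin());
    return true;
}
```

For an illustrative 1x1x28x28 input, `volume` yields 784 elements, so the source array and the destination buffer must each hold at least 784 floats.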

| std::shared_ptr< nvinfer1::ICudaEngine > TensorRTEngine::mEngine | private |

The TensorRT engine used to run the network.

Definition at line 77 of file trt_engine_syh.hpp.

| nvinfer1::Dims TensorRTEngine::mInputDims | private |

The dimensions of the input to the network.

Definition at line 72 of file trt_engine_syh.hpp.

Referenced by build(), and processInput().

| int TensorRTEngine::mNumber {0} | private |

The number to classify.

Definition at line 74 of file trt_engine_syh.hpp.

| nvinfer1::Dims TensorRTEngine::mOutputDims | private |

The dimensions of the network's output.

Definition at line 73 of file trt_engine_syh.hpp.

Referenced by build().

| samplesCommon::OnnxSampleParams TensorRTEngine::mParams | private |

The parameters for the sample.

Definition at line 70 of file trt_engine_syh.hpp.

Referenced by constructNetwork(), infer(), and processInput().

| std::shared_ptr< nvinfer1::IRuntime > TensorRTEngine::mRuntime | private |

The TensorRT runtime used to deserialize the engine.

Definition at line 76 of file trt_engine_syh.hpp.

Referenced by build().